Artificial intelligence (AI) is changing how students learn, how staff work, and how institutions operate. For you as a leader, the question is not whether AI will affect your college, but how well prepared it is.

Where AI is left to chance, staff and students adopt tools in fragmented ways, risks increase, and opportunities are missed. Where AI is guided by clear frameworks, colleges build trust, save time, enrich teaching, and give learners valuable skills for their futures.

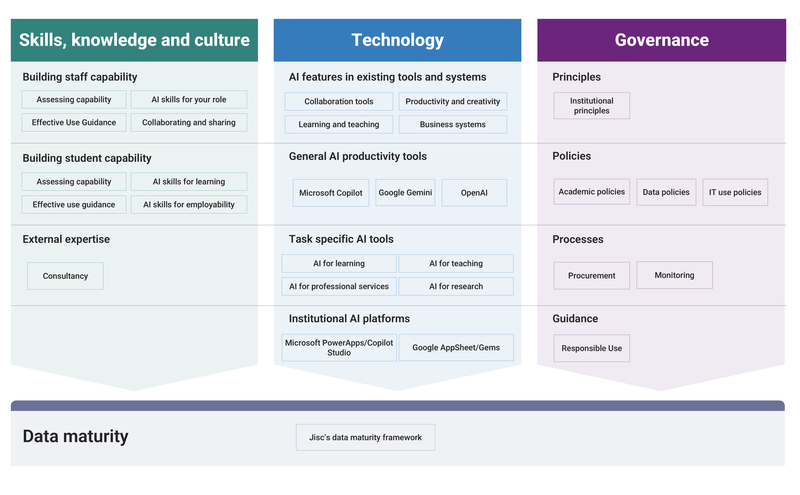

That is why our framework sets out three pillars of responsible adoption:

- Skills, knowledge and culture

- Technology

- Governance

These are all underpinned by strong data maturity.

Alt text for the three pillars infographic

Skills, knowledge and culture

Building staff capability

- Assessing capability

- AI skills for your role

- Effective use guidance

- Collaborating and sharing

Building student capability

- Assessing capability

- AI skills for your role

- Effective use guidance

- AI skills for employability

External expertise

- Consultancy

Technology

AI features in existing tools and systems

- Collaboration tools

- Productivity and creativity

- Learning and teaching

- Business systems

General AI productivity tools

- Microsoft Copilot

- Google Gemini

- OpenAI

Task specific AI tools

- AI for learning

- AI for teaching

- AI for professional services

- AI for research

Institutional AI platforms

- Microsoft PowerApps/Copilot Studio

- Google AppSheet/Gems

Governance

Principles

- Institutional principles

Policies

- Academic policies

- Data policies

- IT use policies

Processes

- Procurement

- Monitoring

Guidance

- Responsible use

Data maturity

- Jisc's data maturity framework

What a mature AI-enabled college looks like

When all three pillars are in place, your college can:

- Provide learners and staff with trusted, consistent toolkits

- Build staff and student confidence through structured training

- Govern AI responsibly, balancing innovation with assurance

- Benefit from faster workflows, stronger learning and improved readiness for the workplace

Pillar one: Skills, knowledge and culture

Across the sector, colleges are beginning to benefit from introducing AI, but adoption is uneven. The technology itself is often not the barrier; staff confidence is. Where staff feel unsure or excluded, AI tools are underused, misapplied, or even resisted. Where staff feel supported and trusted, AI is saving time, enriching learning, and improving the student experience.

Our framework for AI puts skills, knowledge and culture alongside technology and governance as one of the three pillars of responsible AI adoption. For college leaders, this means investing in staff capability and learner AI skills is not a “nice to have”. It is the difference between AI making a real impact and AI remaining a missed opportunity, and between learners being ready for an AI-infused future or not.

Be strategic: build on existing resources

There’s no need to start from scratch when building capability to provide your staff and learners with the AI skills they need to thrive.

We have designed a structured AI literacy curriculum that gives all staff a baseline of understanding, practical application, and ethical awareness. It is delivered online or as modules that can be embedded into continuing professional development (CPD), and it can be used as a foundation for developing your own internal staff development programme.

When staff have the skills and confidence they need, they can work towards the best way to incorporate AI in their teaching. There’s no universal approach – the detail very much depends on the discipline.

Of course, no one agency has all the answers, and this is where communities come in. Our AI community provides a way to learn from others, to share, and to avoid reinventing the wheel.

Core AI skills for staff

Our recommended approach to staff training starts with assessing current capability. From there, we recommend structured training that builds the AI skills each person needs for their role, guidance that frames your college's approach to responsible use of AI and, of course, opportunities to collaborate, share and learn from others.

| Assessing capability | Responsible AI guidance | AI skills for your role | Collaborating and sharing |
|---|---|---|---|
| Benchmark current digital skills and identify strengths and gaps. | Offer clear, accessible guidance on responsible AI use. | Provide structured AI training tailored to role and experience. | Encourage staff to learn from peers and sector-wide practice. |

Core AI skills for learners

The approach for learners is similar to that for staff: it starts with assessing capability and providing guidance on responsible AI. It differs on skills, though, because learners have two distinct needs: AI for learning and AI for employability. The former can be provided centrally, in the same way as other study skills, but the latter needs to be tailored to the discipline and embedded in the course.

| Assessing capability | Responsible AI guidance | AI skills for learning | AI skills for employability |
|---|---|---|---|
| Benchmark current digital skills and identify strengths and gaps. | Offer clear, accessible guidance on responsible AI use. | Provide all students with access to AI awareness and literacy training linked to academic integrity and study skills. | Integrate subject-specific AI use into courses to connect learning with future careers. |

The payoff

When staff are AI-confident, the college gains in multiple dimensions. Lessons can be planned and prepared more efficiently, feedback is delivered more consistently, administration time is reduced, and students see AI being modelled in responsible, thoughtful ways. Most importantly, the college builds a culture that is open to innovation but grounded in good practice. Every investment in technology and governance is underpinned by capable, confident people.

Quick checklist for college leaders

- Is there clear senior accountability for AI capability?

- Do learners and staff say they have access to clear guidance?

- Do all staff have access to Jisc's AI building capability tool?

- Is completion of core AI literacy modules encouraged across the college?

- Are AI sessions built into CPD and induction?

Pillar two: Technology

From lesson planning and marking to student engagement and everyday admin, the right AI tools can save time, support learning, and enhance the student experience.

The challenge: with so many products and promises, which tools can you trust and which add value?

That’s where Jisc comes in. We identify, test, and negotiate licences for AI tools that are safe, effective, and affordable for the sector. We help colleges get early access to innovation, with confidence that tools meet educational, ethical, and value-for-money standards.

First: be problem focused

Before thinking about AI tools, focus on the problems and challenges you want to solve, not on the technology. Tools should be chosen to help with those challenges, and AI won’t be the right solution for every problem. With new technology, it’s easy to fall into the trap of starting with the amazing technology (and a lot of current AI tools are amazing!) and then looking for places to use it. This is the wrong way round.

In some areas this is straightforward – you want your learners to gain AI skills for the workplace, so it makes sense to focus on the tools that are actually used in the workplace.

In other areas, the first question is which problem to focus on. What’s the biggest challenge? Do you have a bottleneck in student recruitment? Is your primary challenge staff workload? If so, which aspects of it? We’ve shared an example of how Jisc identifies potential personal productivity gains with our AI impact workshops.

Be strategic: reuse and partner, don’t build

It’s almost always better to adopt proven solutions than to build your own. That might mean making use of AI features in software you already license, or working with trusted suppliers, in many cases through Jisc agreements.

Start with what you already have. Review your existing tools and your suppliers’ roadmaps to understand which AI features are already available to you, and check whether they can address the problem you want to solve.

Next, consider whether the problem can be solved with a general-purpose AI productivity tool such as Microsoft Copilot or Google Gemini. The solution may well be training staff to use these tools.

If neither approach will solve the problem, it’s time to consider partnering with a trusted supplier.

Developing your own AI tools by writing your own code may look appealing, but robust, secure software is costly to develop and requires long-term maintenance. Meanwhile, security threats such as prompt injection and LLM poisoning continue to evolve. This makes developing secure AI tools a challenge too far for colleges.

Instead, if you need custom workflows, look to the automation tools built into your cloud platform – be that PowerApps if you’re primarily based on Microsoft tools, or AppSheet if your organisation uses Google Workspace. These platforms allow you to safely create tailored solutions that integrate with your existing systems, without the need to manage your own AI models or infrastructure. They also come with built-in security, governance, and compliance controls aligned with your organisation’s cloud environment.

By re-using, buying or partnering, leaders deliver value quickly without exposing colleges to unnecessary risk. And of course, the more ‘industry standard’ tools your learners use, the better equipped they will be for the future.

Core AI tools for learners

Our recommended approach to AI tools for learners is built around four components:

- an industry-standard, general-purpose AI tool

- an AI assistant to help them navigate college life

- tools that directly support their learning and study

- industry-specific AI tools that prepare them for the workplace

A toolkit based on tools piloted or licensed through Jisc would look like this:

| General purpose AI tools | College AI assistant for learners | Tools for learning | Tools for employability |
|---|---|---|---|
| Using industry-standard tools with guided access helps students practise the real-world skills they will use in the workplace. | A 24/7 personal assistant for college life. It answers queries and guides students to resources. | AI tools that support independent study and academic success, strengthening academic outcomes and transferable skills. | Embedding industry-standard tools into courses ensures learners use the same tools as industry in similar ways. |

Core AI tools for staff

Our approach for staff has much in common with what we recommend for students: an industry-standard, general-purpose AI tool and a college AI assistant. We recommend thinking about tools to support teaching and tools to support professional services separately, but many staff combine elements of both roles, so it’s often not a case of one or the other.

A toolkit based on tools piloted or licensed through Jisc would look like this:

| General purpose AI tools | College AI assistant for staff | Tools for teaching | Tools for professional services |
|---|---|---|---|
| Secure, policy-aligned use with institutional data safeguards. | A 24/7 personal assistant for college life. It answers queries and guides staff. | AI tools that improve efficiency and enhance ability to support learners. | Supporting administration, support and leadership roles. |

The payoff

When colleges adopt a coherent, trusted AI toolkit, the benefits are felt across teaching, learning, and operations. Staff save time on routine work and can focus on higher-value activities. Learners gain confidence working with the same tools they’ll encounter in higher education and the workplace, developing both study skills and employability. Institutions see greater consistency, reduced risk, and stronger alignment between practice and strategy.

By partnering with Jisc, leaders can be confident that they are equipping their college community with safe, sector-approved AI tools that deliver value, efficiency and impact.

Quick checklist for college leaders

- Is adoption being assessed for its impact on workload, quality, and the learner experience?

- Is there clear senior accountability for AI technology choices?

- Does your college provide learners and staff with a consistent, trusted core AI toolkit?

- Are tools integrated into CPD, induction, and curriculum design?

- Do staff and students have clear guidance on responsible use and data security?

- Are you making use of Jisc-negotiated licences and pilots to ensure value for money and early access to innovation?

Pillar three: Governance

Why this matters

Where governance is weak, AI use becomes fragmented, exposing staff and students to risks around data, fairness, and trust. Where governance is strong, AI is explored with confidence, innovation flourishes, and the institution builds credibility with learners, staff, employers and other key stakeholders.

That is why our strategic framework places governance alongside technology and skills as one of the three pillars of responsible AI adoption. Governance isn’t bureaucracy. It’s the foundation that enables safe innovation, protects reputation and ensures AI aligns with institutional values.

Be strategic: build on existing governance policies and processes

Whilst AI might feel new, it has in fact been built into many of our tools for a long time. Your existing academic, data and technology policies probably already cover the main points needed, so in most cases you don't need a separate AI policy. Instead, focus on providing clear, user-friendly guidance.

Creating a standalone AI policy can cause confusion. It places responsibility on users to determine whether a feature involves AI, something that is rarely clear or practical. In addition, because most AI-related issues are already addressed in existing policies, creating new ones risks duplication or contradiction.

The exact policies that need to be reviewed will vary between institutions, depending on their approach to policy development. Broadly, policies to review will include:

- Academic policies, particularly around assessment and malpractice

- Data policies, covering protection and management

- IT use policies, including misuse and approved applications

AI may also highlight issues that have always existed but not been fully considered before. For example, many applications have age restrictions and the rapid rise of generative AI tools with complex and changing age limits has brought this into sharper focus.

Above all, focus on guidance. Provide students with clear information on how they can use AI tools appropriately in their studies and give staff practical guidance on keeping data secure. Help all users ensure that their AI use is responsible, ethical and aligned with your institution’s values.

Core governance activities

Strong governance ensures that AI adoption is responsible, transparent and aligned with institutional values. It provides the policies, oversight and assurance needed to protect students, staff and organisations while enabling innovation.

| Principles | Policies | Procedures | Guidance |
|---|---|---|---|
| A top-level statement on your approach to AI. | Review existing academic, data and IT use policies to ensure they cover AI. | Review formal procedures that include the purchase and use of systems with AI. | Expand your AI literacy guidance to include guidance on safe, responsible and ethical use. |

The payoff

When colleges establish robust AI governance, the benefits extend across the institution. Staff and students gain clarity and confidence in how AI is used, knowing that clear principles and policies protect their interests. Leaders can innovate safely, assured that risks are managed and reputation is safeguarded. The college builds trust with learners, parents and partners by demonstrating responsible, transparent AI adoption aligned with sector standards. Strong governance is not about bureaucracy; it’s the foundation for safe exploration, effective practice, and lasting credibility in a rapidly evolving landscape.

By adopting our AI governance framework, college leaders can be confident they are enabling innovation while maintaining oversight, compliance and ethical standards.

Quick checklist for college leaders

- Is there clear senior accountability for AI governance and oversight?

- Have you established and published institutional AI principles, aligned with sector guidance?

- Are policies updated to cover acceptable use, data protection, and safeguarding in relation to AI?

- Do staff and students have accessible guidance on responsible and effective AI use?

- Are procurement and vendor assurance processes robust and transparent?

Summary of actions for leaders

The main actions we recommend can be summarised as follows:

- Provide every member of staff with a structured development path, including capability assessment, training, guidance, and opportunities to share and collaborate. Embed these into CPD, induction, and ongoing professional learning, and evaluate their impact on workload, quality, and confidence.

- Provide every learner with a similar path: AI capability assessment, training for learning and employability, clear guidance on responsible use. Monitor their impact on confidence, engagement, and outcomes.

- Ensure your college adopts a coherent, trusted set of AI tools that meet educational, ethical, and value-for-money standards. Integrate these tools into CPD, induction, and curriculum design so that staff and students can use them confidently, securely, and consistently.

- Establish and publish clear AI principles, update institutional policies and, above all, focus on providing clear and accessible guidance on responsible and effective use. Monitor it and check that it’s cutting through and working for staff.

Useful resources

- Adobe Firefly – introductory guide to using Adobe Firefly and Adobe licence agreement

- Bodyswaps – Bodyswaps pilot report and Bodyswaps licence agreement

- FeedbackFruits – FeedbackFruits pilot report (pdf) and FeedbackFruits licence agreement

- Google AppSheet – case study about how City College Plymouth achieved award-winning productivity gains

- Graide – Graide pilot report and latest Graide pilot details

- KEATH – KEATH pilot call

- LearnWise – LearnWise pilot report and LearnWise licence agreement

- Microsoft PowerApps – see our Jisc cloud services

- TeacherMatic – TeacherMatic pilot report (pdf) and TeacherMatic licence agreement