Introduction

In this guide, we focus on elements of learning and assessment design that research tells us are significant in both the higher and further education and skills sectors.

Drawing on interviews with staff in colleges and universities, and a decade of research into technology-enhanced curriculum design, we explore how digital tools can make a difference to the art of learning design.

What is learning design?

Learning design is a considered, creative process that occurs within a wider ecosystem of people, processes, systems and places, in which each element depends on the others.

As these elements change, and the technologies available to us increase, engaging with an iterative process of creating and redesigning programmes, modules and learning activities becomes ever more important.

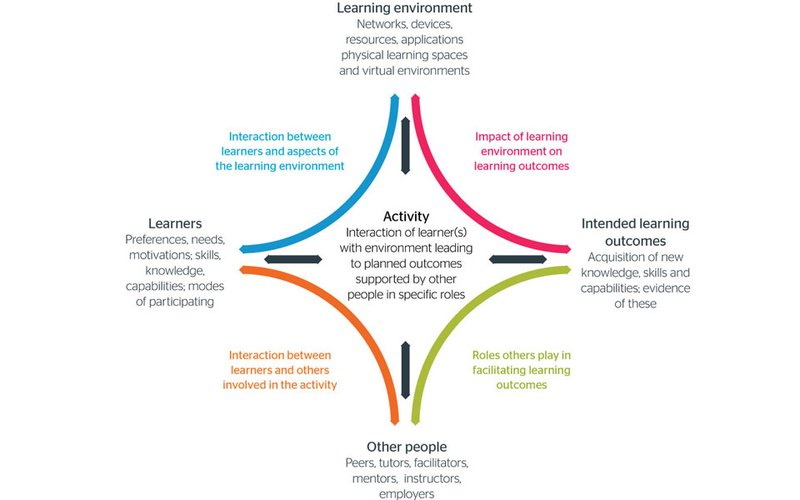

The following diagram, updated from effective practice in a digital age (2007), illustrates the key factors likely to influence this process.

Text version of learning design model diagram

At the centre is activity: interaction of learner(s) with environment leading to planned outcomes supported by other people in specific roles.

There are four points, which feed and are fed by the activity. Each of these four points has a relationship with another. The four points are:

- Learning environments: networks, devices, resources, applications, physical learning spaces and virtual environments

- Learners: preferences, needs, motivations, skills, knowledge, capabilities, modes of participating

- Intended learning outcomes: acquisition of new knowledge, skills and capabilities; evidence of these

- Other people: peers, tutors, facilitators, mentors, instructors, employers

Linking learning environment and intended learning outcomes is the impact of the learning environment on learning outcomes.

Linking intended learning outcomes and other people is the roles others play in facilitating learning outcomes.

Linking other people and learners is the interaction between learners and others involved in the activity.

Linking learning environment and learners is the interaction between learners and aspects of the learning environment.

What is appreciative inquiry?

An appreciative inquiry approach means considering what you already do well and how you might be inspired further by the resources in this guide. In this guide, we use the lens of appreciative inquiry to help you apply what we have learnt in your own context.

As an example of appreciative inquiry in action, here is part of an interview script from Queen’s University Belfast designed to help staff explore new approaches to assessment and feedback:

“Discovering what worked well in the past reminds us that we can bring about positive assessment and feedback experiences for ourselves and our students. Building on these capacities, envision how you can position yourself to embrace assessment and feedback in a more positive way in the future.

Identifying what works, imagine what YOU can do in your modules or the TEACHING TEAM can undertake to improve assessment and feedback for all.”

–Queen’s University Belfast

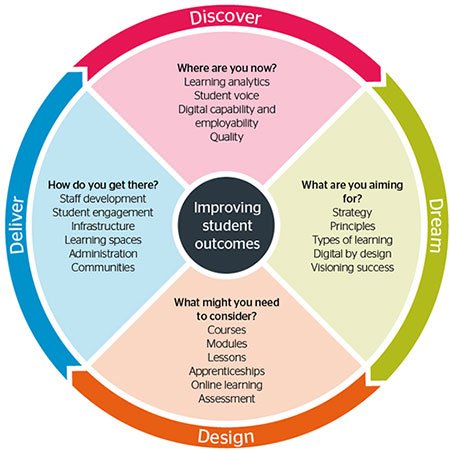

The headings Discover, Dream, Design, Deliver used in our 2018 model of learning design are drawn from the appreciative inquiry approach to change.

Find out more in the appreciative inquiry chapter of our change management guide.

Key supporting work

View our learning design family tree (pdf) for an at-a-glance view of the main outcomes from our past projects and their links to other key developments in learning and assessment design between 2006 and 2014.

Discover

“Pedagogy ‘off the shelf’ denies the fact the context must come first. Think about who the people are you are designing for, and what you want to happen. Pedagogy itself is open to differences in terms of personnel, students and context.”

–Peter Shukie, education studies course leader, Blackburn College

Listen to our interview with Peter Shukie, who talked to us about his experience of building technology into the design of learning and assessment.

Download interview transcript (pdf).

When designing learning in a digital environment, or supporting others in doing so, your journey has to start with a clear understanding of where you are now, and what you want to happen.

In order to justify any changes, or to build on current successes, you will need a reliable evidence base.

This section will help you consider:

- Who your learners are, what technology they currently use and for what purposes

- How you gather and utilise information generated by your students

- How well you enable their voice to be heard

- The digital capabilities of your students

- How well your quality processes are responding to learning and teaching in a digital age

Learning analytics

What you need to know

Learning analytics refers to the measurement, collection, analysis and reporting of data about student progress and how the curriculum is delivered.

Students using digital resources and systems generate data that can be analysed to reveal patterns predicting success, difficulty or failure. These patterns enable teachers - and students - to make timely interventions.

Importantly for this guide, these metrics can also support a more accurate, data-informed approach to curriculum design.

Already, colleges and universities are using dashboards which provide see-at-a-glance evidence of resource usage and student engagement.

This makes possible a more fluid, dynamic response to curriculum development. Essentially, you don’t need to wait for a distant course review to make any changes that are necessary.

Measures derived from this data can also support the institution’s submission for the Teaching Excellence Framework (TEF), be used in preparation for inspection or form part of routine quality assurance.

The benefits of analytics for staff, students and institutions are becoming clearer, but our research also argues for care over how data is interpreted and presented to staff and students. This is an area where we are still gaining understanding, particularly of the ethics of using student data.

Why analytics matter

With the benefit of analytics, large higher education institutions can spot differences in the amount of teaching and assessment used to deliver learning outcomes of the same credit value across different courses and disciplines. Equally, this kind of data analysis can reveal examples of over-teaching or over-assessment.
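As a rough illustration of the comparison described above, the following Python sketch normalises the number of assessments by credit value and flags modules that sit well above the typical load. The module codes, figures and the `tolerance` threshold are hypothetical, chosen only to show the idea; a real analysis would draw on your own curriculum data and locally agreed thresholds.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Module:
    code: str         # hypothetical module identifier
    credits: int      # credit value of the module
    assessments: int  # number of summative assessments set


def assessments_per_10_credits(module: Module) -> float:
    """Normalise assessment count so modules of different sizes can be compared."""
    return 10 * module.assessments / module.credits


def flag_heavy(modules, tolerance=1.5):
    """Return codes of modules whose load exceeds tolerance x the median load."""
    loads = {m.code: assessments_per_10_credits(m) for m in modules}
    typical = median(loads.values())
    return sorted(code for code, load in loads.items() if load > tolerance * typical)


# Illustrative data only
modules = [
    Module("HIS101", 20, 2),
    Module("BIO101", 20, 5),
    Module("ENG101", 20, 2),
    Module("ART101", 40, 4),
]

print(flag_heavy(modules))  # ['BIO101']
```

A flagged module is a prompt for discussion rather than proof of over-assessment: some disciplines may have good reasons for setting more frequent, smaller tasks.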

A brief overview of learning outcomes can reveal similar discrepancies. Often you find that some learning outcomes on modular courses are assessed multiple times in different parts of the course whilst others are ignored.
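A minimal sketch of such an overview, assuming you can list which learning outcomes each assessment addresses: it counts how often every programme outcome is assessed, then picks out those never assessed and those assessed repeatedly. The outcome labels, assessment names and the threshold of three are illustrative assumptions.

```python
from collections import Counter


def outcome_coverage(programme_outcomes, assessment_map):
    """Count how many assessments address each programme learning outcome."""
    counts = Counter(
        outcome for outcomes in assessment_map.values() for outcome in outcomes
    )
    # Include outcomes that never appear, with a count of zero
    return {outcome: counts.get(outcome, 0) for outcome in programme_outcomes}


# Illustrative data only: outcome labels and assessments are hypothetical
programme_outcomes = ["LO1", "LO2", "LO3", "LO4"]
assessment_map = {
    "Essay": ["LO1", "LO2"],
    "Exam": ["LO1", "LO2"],
    "Presentation": ["LO1"],
    "Portfolio": ["LO3"],
}

coverage = outcome_coverage(programme_outcomes, assessment_map)
unassessed = [o for o, n in coverage.items() if n == 0]
repeated = [o for o, n in coverage.items() if n >= 3]

print(coverage)    # {'LO1': 3, 'LO2': 2, 'LO3': 1, 'LO4': 0}
print(unassessed)  # ['LO4']
print(repeated)    # ['LO1']
```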

Even a review of course information in a virtual learning environment (VLE) can be instructive. Imagine how useful it would be next time you are designing a unit of learning to know which learning activities have been used the most, which have resulted in high achievement, and which presented the greatest difficulty.

Access to this kind of information enables you to improve the design of your programme, course or unit of learning year on year - and to track the impact of any changes you make.

What the experts say

“There is an increasing number of studies using control groups that show that retention and other measures of student success can be positively influenced by the use of learning analytics.”

–Niall Sclater, consultant in learning analytics

Read our 2017 briefing on learning analytics and student success (pdf).

Be inspired: case studies

Ulster University - analytics improve achievement in higher education

Ulster University has used analytics to bring forward the timescale for re-approval of programmes which fall below the institutional or sector average. An example is benchmarking programme outcomes against the sector average for student employment.

The process may be prompted by analytics but it is still a positive one. Staff are encouraged to view any early re-approval and revalidation as a design opportunity that will bring improvements for themselves and their students.

“A new design is likely to bring about better results so it makes sense to concentrate on programmes identified by analysing this kind of data.”

–Paul Bartholomew, pro-vice chancellor (education), Ulster University

Salford City College - analytics embed digital learning

A dashboard application at Salford City College illustrates at a glance how staff are supporting their students with digital learning. This data enables the college to suggest more cost-effective ways of delivering the curriculum.

One of the college’s seven strategic aims is to develop a quality and performance management system that measures the impact of digital learning, provides data that can feed into individual learning plans and enables early intervention when students are at risk.

The college is also considering setting key performance indicators for staff to inform the college’s continuing professional development (CPD) programme. Achievement rates from a badge system for digital capabilities are already collated and published for staff in the college’s VLE, Canvas.

“The college is still on a journey towards a fully digital approach to learning and teaching, but the potential is there. We have at least made a start by showing staff how analytics can make a difference to the way they work.”

–Deborah Millar, formerly director for digital learning and information technology services, Salford City College

University of Huddersfield - analytics prevent assignment bunching

University of Huddersfield staff are asked to bring average marks from their assignments along to staff development workshops. This helps reveal patterns such as ‘assignment bunching’, where too many assignments are set in the same time period. Interrogating the data has also shown that marks achieved at the end of the year can be 10% lower than at other times.

The university’s work on analytics was aided by a project in 2013 which explored use of assessment data to improve student performance. Its recommendation was that assessment analytics play an important part in learning and teaching, but students need support in interpreting data about their performance.

“Assessment and feedback is a highly emotive and therefore a sensitive issue for students…. Simply providing the data in the form of a dashboard is unlikely to be effective unless students are offered training in its interpretation and accessible strategies to act upon it.”
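The ‘assignment bunching’ pattern mentioned in this case study can be checked with very little code. The sketch below is not the University of Huddersfield’s method; it simply assumes you have a list of assignment deadlines and groups them by ISO week to show where more than a chosen number fall together. The assignment names, dates and the `max_per_week` limit are hypothetical.

```python
from collections import Counter
from datetime import date


def bunched_weeks(deadlines, max_per_week=2):
    """Return {(iso_year, iso_week): count} for weeks with too many deadlines."""
    per_week = Counter(d.isocalendar()[:2] for d in deadlines.values())
    return {week: n for week, n in per_week.items() if n > max_per_week}


# Illustrative data only
deadlines = {
    "Module A essay": date(2024, 3, 4),
    "Module B report": date(2024, 5, 6),
    "Module C portfolio": date(2024, 5, 8),
    "Module D presentation": date(2024, 5, 10),
}

print(bunched_weeks(deadlines))  # {(2024, 19): 3}
```

Here three deadlines fall in ISO week 19 of 2024, which would be a cue for the teaching team to consider rescheduling.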

Get involved

- Sign up for our learning analytics service by emailing setup.analytics@jisc.ac.uk

- Check your code of practice against our code of practice for learning analytics

- Follow our blog on effective learning analytics

Student voice

What you need to know

Designing effective digital learning experiences depends on knowing as much as you can about your students and their digital confidence and experience. Profiling your students at enrolment helps you understand better:

- Who your students are

- How and where they learn

- How confident they are in using technology

- What access they have to digital technologies

- How they apply those technologies to learning

- What kind of technologies they value most when learning

Partnership approaches

Setting up student-staff partnership roles such as digital ambassadors is another great way of establishing dialogue with your students (see the engaging learners section).

A win-win situation for students and their institutions, partnership approaches such as these allow students’ experiences of digital learning to be heard at the same time as improving their employability skills.

Why student voice matters

As well as forming a routine part of market intelligence and data analysis, obtaining students’ views on their use of, and feelings about, technology in learning and assessment should be seen as a vital part of learning design.

Student surveys can reveal gaps in parity of provision (for example, from campus to campus), show whether investment is needed in the infrastructure and indicate whether learning effectively prepares students for employment in a digital world.

For a really accurate picture of the impact of digital on your students, be sure to gather the views of all students. In general, digital approaches improve access for disabled students, as they do for those living, working and studying remotely, but the effectiveness of digital learning for all students should not be taken for granted.

Nor should it be assumed that all younger students are digitally confident or that those who are ‘tech-savvy’ will make effective use of technology in learning. Listening to what students say yields valuable, sometimes unforeseen, insights into their world, without which your time and effort as learning designers could be undermined.

What the experts say

“Universities and colleges are investing large sums of money on their digital environment in terms of infrastructure, learning materials and supporting their staff with the development of their digital capabilities. But do we know how the investment being made in these areas is impacting on our students’ digital experience?”

–Sarah Knight, Jisc

Read our 2017 student digital experience tracker briefing (pdf).

Be inspired: case studies

University of Lincoln - working in partnership with students

Working in partnership with students to evaluate and enhance the student experience is at the heart of the University of Lincoln’s philosophy. Partnership initiatives at the university include work shadowing between students and the executive team, student representation on interview panels and appointing engagement champions.

The student engagement team also researches the impact of digital learning to better understand what kind of approach works best. Inspired by our report What makes a successful online learner?, Jasper Shotts recorded the digital habits of successful learners to develop tips for the next cohort. These activities yield data that is used to create more effective course designs in the future.

“Student digital expectations may be quite different to those of teachers so I want to do a survey on what apps students are using. There are lots of barriers to students being informed consumers in higher education and working with student representatives is a very useful way to find out what these are.”

–Jasper Shotts, principal lecturer, University of Lincoln

Jisc digital learner stories - Jade, a further education accountancy student

Our digital student project explored a wide range of students’ expectations and experiences with digital technology, including the experiences of online learners, traditional campus-based and work-based learners. Twelve learners interviewed shared their experiences on video. Jade, a trainee accountant, was one of them.

“Blended learning really helped me because I could do the work at my own pace. I could get through the course at a really quick pace which helped me because I was working at the same time as doing the course.”

–Jade, a level 3 AAT (Association of Accounting Technicians) student, Bradford College

Get involved

- Use our four dimensions framework to assist you in setting up successful collaborative partnerships with your students

- Use our benchmarking tool, produced in conjunction with the National Union of Students, to improve the student experience at your institution

- Find out more about the student digital experience tracker project

Supporting guides

- Student digital experience tracker 2017: the voice of 22,000 UK learners

- Insights from our institutional pilots (pdf) - part of our student digital experience tracker

- Key themes from our digital student/learner stories

- Digital student – further education, a report on FE learners’ expectations and experiences of technology

Digital capability and employability

What you need to know

For today’s students, proficiency in the skills of a specific discipline is not the only outcome they need from their courses. For their future employability, students also require a wider skill set that will enable them to thrive in an increasingly digital world.

We refer to this skill set as ‘digital capability’, a broadly based concept covering media and information literacy, digital research and problem-solving, creativity with digital tools as well as routine management of communication and social media tools.

Why digital capability matters

For learning designers, this means building in opportunities for students at all levels and in all disciplines to acquire a wide range of digital skills – this is just as essential for mechanics and beauty therapists as it is for research historians and medical professionals.

Educators equally need to consider what their students will need in the future. Today’s students will have to respond with agility over their lifetimes to shifting labour market requirements and fast-changing developments in technology.

This is a far cry from thinking of employability as a fixed set of skills delivered to students on vocational courses!

What the experts say

“We need to be asking questions such as ‘What does it mean to be a nurse?’ Being a healthcare professional will mean something completely different in five years’ time. Increasingly nurses are becoming educators and showing people how to monitor their own health and respond to it.”

–Helen Beetham, educational consultant

Be inspired: case studies

Basingstoke College of Technology - becoming digital professionals

As well as making learning more effective, Scott Hayden’s embedded use of social media on the college’s creative media courses helps students become digital professionals long before they go on to employment or higher education.

“My students go on to university and apprenticeships and paid work with the skills employers want - creativity, collaboration, communication, building up a digital reputation.”

–Scott Hayden, digital innovation specialist, Basingstoke College of Technology

Edinburgh College of Art - developing 21st century career-ready graduates

Edinburgh College of Art, an early adopter of the employability agenda, has embedded opportunities for its students to develop career-ready skills and graduate attributes in three ways: through assessed learning outcomes, the design of learning activities and a student-centric approach to curriculum design.

For example, by the third year of a four-year honours degree, students define and lead their own thematic project within an external real-world context. For some, this could mean a placement or study abroad. In the final year, students propose and direct their whole year of study under supervision, an approach to curriculum design that provides seamless acquisition of workplace skills and attributes.

Abertay University - building digital and employability capabilities into the curriculum

While completing work placements, students of sports and exercise at Abertay University develop a range of additional skills and capabilities at the same time as improving their understanding of the curriculum by working on reflective e-portfolio-based tasks.

The assessment framework emphasises for students the importance of these e-portfolio elements – for example, 50% of the credits awarded on the second-year placement module are for the webfolios students assemble while out on placement.

With such a weighting applied, students place value on assignments that enhance their employability by enabling them to develop the reflective and professional capabilities employers are looking for.

“It is only by integrating [e-portfolio] use in the curriculum and supporting that with 50% credit in the modules in which it is used that we made a difference to students’ capabilities as reflective professionals.”

–Andrea Cameron, dean of school of social and health sciences, Abertay University

Get involved

- Check your learning designs with our digital capability checklist for curriculum developers

- Encourage new ideas: try our digital capability activity cards

- Take a look at iDEA, The Duke of York Inspiring Digital Enterprise Award, a programme of badged awards designed to help you develop digital and enterprise skills

Quality

What you need to know

When designing or redesigning learning to take advantage of digital technologies, keep the following key questions in mind to ensure that your designs really do enhance your students’ achievement:

- What are your institution’s strategic aims for learning, teaching and assessment?

- What points for improvement have been identified in programme/module reviews or external inspection reports?

- What learning outcomes are you trying to achieve?

- In what context will the learning take place?

- What technologies are available to enhance learning?

- What support do you need?

- What do you already do well?

The answers to these questions provide the building blocks for your choice of approach - see the approaches to learning design section. Getting the mix right will enable you to deliver a high-quality learning and assessment experience.

Do not be surprised, however, if your design ‘template’ differs from context to context – one size does not fit all.

Why digital quality processes matter

Digital learning is increasingly embedded into quality assurance procedures. There are a number of reasons for this.

Large institutions, in particular those offering modular programmes or courses split between different campuses (sometimes as a result of mergers), need to provide parity of experience for all students and staff – for example, by ensuring consistent, comprehensive access to high-quality digital learning that has been fully ‘thought through’, as outlined above.

The need to improve assessment has led some higher education institutions to standardise the credit value of modules. Some have set a maximum number of learning outcomes and assessments for a certain number of credits and many more are working to ensure information about assessment is easily accessible to students and tutors. However, achievement of this goal depends on efficient and effective processing of data about the curriculum – another way in which digital technologies impact on quality assurance.

Further education colleges are similarly working towards a standardised student experience by rolling out institution-wide technologies such as a new VLE, Office 365 or iPads. Such wholesale change is often driven by the need to improve the quality of learning across all courses, but can only be achieved by embedding digital use into routine quality assurance and CPD processes.

What the experts say

"Quality matters - it is not about the percentage of online content but how effective the learning is."

–James Kieft, learning and development manager, Activate Learning

Be inspired: case studies

Manchester Metropolitan University - achieving a consistent student experience

In the course of one year, Manchester Metropolitan University, a multi-campus university, implemented a new curriculum framework that changed the size of undergraduate modules and set limits on the number of learning outcomes and assessments.

This resulted in the need for every undergraduate module to be rewritten, reviewed, approved and set up in supporting systems and processes. At the same time, new web and mobile technologies were introduced to ensure that all students received maximum benefit from the changed curriculum.

“The ambitious scope and timescale for the project required unprecedented innovation, excellent project management, support from the highest level, and the wholehearted engagement of almost every member of MMU.”

–Mark Stubbs, professor and head of learning and research technologies, Manchester Metropolitan University

Read the Manchester Metropolitan University report in full (pdf).

Forth Valley College - embedding quality across a merged institution

Forth Valley College became the first regional college in Scotland when Falkirk College merged with Clackmannan College in 2005. With campuses in Alloa, Stirling and Falkirk, its catchment extends across a broad area of central Scotland.

To ensure quality across all its provision, the college has implemented a four-year plan across all campuses which focuses on one common theme – making learning work – and has embedded its learning and teaching goals into all quality assurance processes, from appraisal to continuing professional development (CPD).

“This mission has permeated the organisation like writing in a stick of rock. Everyone knows we are here ‘to make learning work.’”

–Ken Thomson, principal and chief executive, Forth Valley College

University of Strathclyde - enhancing institutional responsiveness

The University of Strathclyde found that an online process for gathering curriculum information creates a single, accurate source of data that can enhance quality and approval processes. The introduction of the university’s centralised C-CAP system has also improved institutional responsiveness in a rapidly changing global marketplace.

“C-CAP is the first step in a move from a bureaucratically onerous design and approval process to one that is more efficient, effective and better placed to be responsive to the needs of stakeholders.”

–George MacGregor, institutional repository co-ordinator, University of Strathclyde

Get involved

Mainly for further education

The Blended Learning Consortium’s collaborative funding model ensures high-quality online content for further education courses.

Dream

“Technology is changing the context for which we are designing. We need to design for our own new organisational context and for the world that the students are going out into.”

–Helen Beetham, consultant in higher education

What are you aiming for?

This section is about developing your vision for what learning, teaching and assessment could be like in your institution.

Asking 'what do my learners need to learn?' and 'what skills do they need to acquire to meet the learning outcomes for this lesson, course or module?' leads to considering how to achieve these outcomes in the most effective and inspiring way.

We look at how digital technologies can help you, and conclude that digital provides a far wider palette of tools and approaches to choose from. The advantages of this increased breadth are numerous.

Using digital tools, you can meet different learners’ preferences, be more flexible about the environment they learn in, widen the potential for interactions through online dialogue and social media – and simply make learning more active and engaging.

Whatever form your vision takes, digital tools also make it easy to communicate, leading to more meaningful exchanges between learners, staff, employers and other stakeholders.

Strategy

What you need to know

This guide is about learning design rather than strategy development. It is, however, worthwhile considering the part a digital strategy plays in the achievement of good learning and assessment design.

If you are involved in developing or implementing a digital strategy, these are our tips for success:

- Base your strategy on a set of educational principles - see principles section

- Ensure your strategy is translated into policy and procedure and can be clearly followed through into practice – see quality section

- Align your strategy with other institutional strategies such as estates (see learning spaces section), IT (see infrastructure section), staff development (see staff development section), business growth and marketing in order to achieve institution-wide transformation

- Make best use of data to monitor the impact of your strategy – see learning analytics section

- Encourage innovation by establishing a supportive, blame-free culture

- Communicate your mission to all stakeholders in ways that are meaningful and transparent.

You can also read our quick guide on how to shape your digital strategy.

Why a strategic approach matters

With the challenges posed by the Teaching Excellence Framework (TEF) and the recommendations of the Further Education Learning Technology Action Group (FELTAG) report (pdf), a strategic approach is essential to steering your organisation towards successful digital practices.

Clear leadership from senior managers is the key. Without leadership and strategic direction, digital learning and assessment – and the benefits these can bring – are likely to only exist in dispersed ‘pockets of good practice’ rather than becoming the norm.

When implementing your strategy, aim for an open, supportive culture and instil a passion for good learning throughout the institution. Such a culture empowers teaching staff to experiment and provides the motivation to work through initial difficulties.

Recognising this, many institutional leaders now participate in digital training sessions alongside their staff to demonstrate their commitment to the organisation’s strategic goals and to unite everyone behind the common goal of providing a high-quality learning experience for all students.

What the experts say

“The digital student experience is not ‘one thing’ but is many faceted and born of complex and fluid relationships between different elements… Taking a strategic approach ensures the benefits of any interventions are realised.”

–Clare Killen, educational consultant

Read more in our guide to enhancing the student digital experience: a strategic approach.

Be inspired: case studies

Staffordshire University - a connected university

Staffordshire University’s new digital strategy aims to transform the institution into a 'connected university'. Under its influence, the culture is changing: embedded use of digital for online teaching and learning is becoming the norm, all academic staff have been issued with Surface Pro laptops and classrooms have been refurbished to better support digital learning and teaching. The new strategy has also turned the spotlight on designing whole courses rather than individual modules.

“It is clear that the new structure and strategy will embed technology in a much more strategic way.”

–Sue Lee, e-learning manager, Staffordshire University

University of Northampton - becoming 'Waterside ready'

The move to the new Waterside campus has become the catalyst for a new strategic vision for learning and teaching based on active blended learning (ABL) at the University of Northampton.

ABL involves student-centred, participative learning experiences in which blended learning and student-led activities outside the classroom are as much the norm as classroom-based activities.

“ABL is our new normal. A module that makes effective use of ABL is a ‘Waterside ready’ module. ABL is not something we do in addition to our regular teaching duties: it is our standard approach to learning and teaching.”

–Professor Alejandro Armellini, dean of learning and teaching, University of Northampton

South West College (SWC), Northern Ireland - changing strategic direction

Senior managers at SWC recognised the benefit to the college of offering education and training beyond the home region, and so began a strategic drive to extend the college’s provision through distance learning.

SWC is now on course to achieve a proportion of online delivery and assessment on all face-to-face courses. In 2016, 34 hours out of a total of 600 guided learning hours for each learner were delivered online and approximately 60% of all vocational courses had some form of online assessment.

“Teaching staff, external verifiers and students all benefit from being able to learn and be assessed off site. Tracking student performance is automated, enabling staff to monitor and respond to at-risk students.”

–Ciara Duffy, virtual services manager, South West College

Portsmouth College - taking the digital plunge

In 2014, Portsmouth College launched its personal learning strategy. The timetable was radically changed and all full-time 16-18 year-old students were provided with iPad Minis to make use of the increased independent learning hours.

Combined with redesigned learning spaces and high-density wifi across the campus, this strategy has given everyone at Portsmouth College the same opportunities for anytime, anywhere learning. The college has since seen a 27% increase in student numbers.

“Rather than keeping technology on the periphery of teaching and learning, we decided to go digital to the core.”

–Simon Barrable, deputy principal, Portsmouth College

Get involved

- Join our digital leadership training programme

- Take up our consultancy offer for targeted support, for example, for a digital strategy review

Read more

Principles

What you need to know

Educational principles can be a vital element of any strategy for learning, teaching and assessment.

Principles are a way of articulating your shared educational values as a college or university. They also provide a benchmark against which everyone can check their progress towards your organisation’s strategic mission for enhanced learning, teaching and assessment.

As a result, a set of principles is often built into organisational strategies.

Why principles matter

Principles of good practice unite all staff – academics, tutors, learning technologists, support staff as well as those responsible for quality assurance and administration – in working together towards a common goal.

Professor David Nicol argues that principles:

- Provide a common language

- Provide a reference point for evaluating change in the quality of educational provision

- Summarise and simplify the research evidence for those who don't have time to read all the research literature

- Help put important ideas into operation

Where improvement is required, having such benchmarks of good practice can help you move forward with common agreement on what is fundamentally important.

And, as the basics of good pedagogy are not always widely understood, adopting or writing your own set of principles can also be an effective way of communicating your mission. Activities based around defining principles can also help staff envisage where digital technologies can make a difference.

What the experts say

“Principles capture an important idea while at the same time they point to implementation. For example, good feedback practice should 'encourage interaction and dialogue around learning' captures the idea that feedback is a dialogical process and suggests that this should be encouraged by teachers through their task or course designs.”

–David Nicol, emeritus professor of higher education, University of Strathclyde

Read more in 'why use assessment and feedback principles?' from Strathclyde's Re-Engineering Assessment Practices (REAP) project.

Be inspired: case studies

Glasgow Caledonian University - setting principles for curriculum design

Glasgow Caledonian University links its approach to curriculum design to a clear set of principles which are defined in the university’s strategy for learning 2015-2020. The eight curriculum design principles are embedded across all programmes at undergraduate and postgraduate level.

“[Our] strategy for learning has been developed through a consultative process with staff, students, college partners and employers and is informed by international and national developments and effective practice in learning, teaching and assessment.”

–Glasgow Caledonian University strategy for learning 2015-2020

Sheffield Hallam University - looking through different lenses

Sheffield Hallam University has developed a number of ‘design lenses’ for topics to consider when designing good learning and teaching practice. The lenses have been captured on a series of cards for use in learning design activities.

View the resources below:

Activate Learning - a learning philosophy for further education

Activate Learning is an education and training group based in Oxfordshire and Berkshire. The Group places a high value on its philosophy for learning which is designed to help staff understand better what will work for them and their students. A robust, up-to-date digital environment is seen as an important means of putting the philosophy into action.

“Helping our students learn and achieve their best is at the heart of everything we do. We have developed a unique learning philosophy focused on brain, emotions and motivation to empower our learners on their journey to success.”

–Activate Learning

Get involved

- View the Queen's University Belfast cards, which link its assessment principles to a range of technologies

- View Activate Learning’s learning cycle and try out a short module on its application

Further reading

- Nicol, D. (2007), principles of good assessment and feedback: theory and practice

- Russell, M. and Davies, S. (2011), some principles relating to effective assessment and feedback

Approaches to learning design

"We should be aware of the pedagogies that are possible as much as we are aware of the affordances of the technology. The two together make up technology-enhanced learning. They have got to be well formed partners that align completely with one another."

–Peter Shukie, education studies course leader, Blackburn College

This element of our guide offers a brief account of the approaches to learning that you as learning designers might find useful. Curriculum design and support for online learning provides a more detailed introduction to models and theories of learning.

What you need to know

There is no single type of pedagogic approach you are advised to take when designing learning with digital technologies. We take the view that certain types of learning activity are suited to achieving certain types of learning outcome, and that it is equally important to select the right digital tools to support your aims.

Achieving the right mix of learning activities, tools and technologies for each unit of learning is the art of the learning designer.

Active learning

Active learning requires students to participate in their learning rather than being passive recipients of others’ knowledge, as occurs in traditional lectures or taught classes.

The advent of technology has done much to promote active learning by getting students to do a greater range of things (research, practice, discuss, present, self-assess, publish online) and by encouraging reflection about what they have done (e-portfolios, blogs, online debate or chat).

Find out more

Read University College London’s toolkit for active learning (pdf).

Authentic learning

This model of learning is also based around the belief that learning should be active, practical and challenging rather than theoretical and passive. And as before, digital tools can play a significant role by enabling ‘close-to-real’ learning experiences in disciplines as diverse as automotive engineering and medicine.

With the focus firmly on curricula that are responsive to the needs of the labour market, authentic learning based on simulation software, virtual worlds, gaming technologies and interactive role play in online learning environments will play an increasingly important role in the future.

Find out more

Read our case study on the University of Greenwich's virtual law clinic (pdf) and our case study on S&B Autos Automotive Academy, Bristol (pdf).

Blended learning

Blended learning is a combination of face-to-face activities and digital tools and resources designed to deliver the best possible learning experience.

The use of learning tools can occur before, during or after a face-to-face session and support a variety of pedagogic purposes. The blended component, for example, might aim to extend the time students spend on task, develop their information literacy skills, stimulate their interest before a class, or enable them to work at their own pace afterwards.

The term suggests careful and deliberate integration of online and face-to-face activities.

More general terms used to describe the use of technology in learning include ‘technology-enhanced learning’, ‘e-learning’ and ‘ILT’ (information and learning technology).

Find out more

- Embedding blended learning in further education and skills

- Developing blended learning content approaches

- FutureLearn: blended learning essentials courses: getting started, embedding practice and developing digital skills

Watch the Ufi Charitable Trust video on how blended learning can help your students.

Flipped learning

Flipped learning reverses the traditional sequence of delivery by a teacher followed by reinforcement activities for students. It is sometimes called 'upside down pedagogy' or 'just in time' teaching.

In a flipped approach, students engage with selected resources – usually learning content or quizzes on the VLE – before joining a virtual or face-to-face session during which they deepen their understanding of the topic.

Inquiry-based learning

Inquiry-based learning (IBL) is based on the investigation of questions, scenarios or problems. Teachers as facilitators help students identify issues to research to develop their knowledge and problem-solving ability. Inquiry-based learning, as a result, may contain elements of problem-based learning.

Inquiry-based learning can also form part of research-led or problem-based learning. Both approaches to learning design allow students to further their understanding of their discipline by undertaking relevant challenges and/or carrying out research of their own.

Inquiry-based and problem-based learning both entail students selecting the research methodologies and digital tools that best suit their purpose. It goes without saying that students learning this way will need a range of digital capabilities (see section on digital capability and employability).

Find out more

Online learning

Online learning has long been associated with distance learning – now largely a technology-supported mode of learning which enables students to study for qualifications without attending classes.

More and more colleges and universities have used online learning to broaden their customer base and reduce their fixed costs. And increasingly, today’s time-poor and economically challenged students have been attracted by the flexibility of online distance learning.

This mode of delivery can also enable an institution to maintain choice for students by offering low-enrolment courses or modules online.

However, students’ ubiquitous access to the internet can mask how fundamentally different an experience a wholly online model of learning can be. Both students and staff need support and training to gain the most from this mode of learning.

In general, it is best to be led by pedagogic purpose rather than the availability and appeal of particular technologies when deciding which approach to learning to adopt – see the section on principles.

Nonetheless, partly or wholly online learning increases learner responsibility and control. Students learning online develop habits of self-regulation and digital skills that support their lifelong learning.

Supporting guides

Research-led learning

Research-led learning places students in the role of researchers to enable them to experience how knowledge is developed and applied in their discipline.

Research-led students work in partnership with their lecturers to construct new forms of knowledge and to deepen their understanding.

This enables students even at undergraduate level to become immersed in the practices of their discipline.

Find out more

Team-based learning

Team-based learning is a specific approach that can involve flipped learning and elements of inquiry/problem-based learning.

Students work in the same group over a period of time to collectively develop their understanding of core concepts and to solve problems. As in flipped learning, students engage with learning materials in advance of face-to-face sessions.

Multiple-choice questions used at the start of each session then test the knowledge of both the individual and the team. Having assessed students’ readiness to move on, tutors can give them immediate feedback as a form of formative assessment.

Working in teams in this way not only helps tutors assess individuals’ progress but also enables students to develop a range of transferable skills.

Find out more

Work-based learning

Work-based learning bridges the gap between formal education and training on the one hand and employment on the other, enabling students to achieve learning outcomes that are not normally possible in educational contexts. These include the acquisition of workplace skills and practices that advance their progress towards a chosen career. Work-based learning can also encompass apprenticeships (see apprenticeships section).

Depending on the qualification and the nature of the workplace, the institution’s role will vary from providing mentorship and tutorial functions to having full responsibility for students on work placements as part of a course. Thus a work-based learning model will necessarily entail close partnership working with employers over learning outcomes, course content and assessment.

Digital by design

What you need to know

Throughout this guide we emphasise the need to put pedagogy first when designing learning and assessment activities.

In this section, we look at how to ensure that best use is made of the digital tools at your disposal to support the pedagogic goals and approaches to learning you have identified as appropriate.

In learning that is truly digital by design, students have an enhanced set of learning experiences and can move seamlessly between physical and virtual environments that are supportive, stimulating, engaging, challenging and inspiring.

This is what you are aiming for!

Where to start

Here are some tips for effective design, adapted from the University of Northampton's report, Overcoming barriers to student engagement with Active Blended Learning (pdf):

- Ensure there is an explicit relationship between online components of the course and face-to-face sessions

- Connect face-to-face and online components so that face-to-face sessions use the outputs of online components or vice versa

- Ensure that staff mediate online activities

- Avoid repetition of content in the classroom that has already appeared online and vice versa

- Progressively increase digital and cognitive skill requirements over time

- Clarify the value of online activities and the skills learned, for example in relation to employability

- Vary the tools and types of activity to avoid doing the same thing and overemphasising particular tools

- The tool, the task or the knowledge can be new and challenging but avoid all three at the same time!

What the experts say

“Our schemes of work require the integration of online and face-to-face to be clearly articulated on each course to avoid the two components being treated as separate.”

–Peter Kilcoyne, director of ILT, Heart of Worcestershire College

Read more about the Blended Learning Consortium led by Heart of Worcestershire College.

Be inspired: case studies

Hartpury College - combining digital and traditional practice

Hartpury College is a land-based college with some 4000 students and 500 staff. The college has a mission to embed digital learning across the whole of its provision.

Under a newly devised ‘Take 20’ model, individuals or small groups of staff can book a 20-minute slot with the blended learning team to obtain personalised guidance on the right pedagogy and digital tools for their needs.

To help staff understand better how digital fits into good classroom-based teaching and learning, Hartpury has also helped produce a SCORM-compliant online training package for staff: Planning for Quality Online Learning.

"Digital learning should not replace classroom-based practice but be an extension of it, enabling learners to move seamlessly in and out of digital and classroom environments."

–Andy Beddoe, blended learning manager, Hartpury College

Heart of Worcestershire College - blended learning brings organisational benefits

The FELTAG report (pdf) challenged the further education and skills sector to achieve a 10% wholly online component in all programmes starting from September 2015.

The Heart of Worcestershire College’s Scheduled Online Learning and Assessment (SOLA) approach means the college met the FELTAG target in 2016 and is approaching 20% in a number of key areas. The SOLA model, which has been applied to all level 2 and 3 full-time programmes of study, is bringing cost benefits to the college as well as learning gains for students.

“This model has had a positive impact within the college allowing efficiency savings of over £200k per year. Success rates in the college have improved by over 11% since blended learning was first implemented.”

–Peter Kilcoyne, director of ILT, Heart of Worcestershire College

Grimsby Institute and University Centre - embedding learning technologies

Believing that one of the most important roles for a college or university is preparing students for the workplace, Grimsby Institute has invested in virtual reality technology to bring a lifelike dimension to learning on a range of post-16 courses.

Membership of Jisc has helped the Institute find the right way to improve student engagement and boost attainment through a targeted selection of learning technologies. This has been followed up by guidance and support giving students and staff the confidence and digital skills to take full advantage of new technology.

Get involved

Get Interactive: Practice Teaching with Technology, the Bloomsbury Learning Environment’s free massive open online course (MOOC), takes you through some of the technologies that educators use to make their learning engaging, interactive and dynamic.

During 2014-2015, the Learning Futures programme commissioned 17 projects to develop CPD resources for staff, leaders and governors to increase skills and confidence with learning technologies. All resources are free for you to use and adapt for your own purposes.

Visioning success

What you need to know

Now you have embarked on designing for digital, how will you know you have achieved successful outcomes? Is success just about course completion?

In other parts of this guide, we look at how you develop good practice in digital learning and assessment. Here we invite you to envisage what success looks like.

There is overlap with the topics of digital capability and employability as we ask what qualities you would like your students to have as a result of studying at your organisation.

Why this matters

It is important to consider the impact of digital learning designs on students and to discuss with them what they will gain from the models of learning you have adopted.

Many students are challenged by the level of autonomy expected of them at college or university and some are unclear what they are achieving through independent learning. Equally, we can learn a lot from the strategies and approaches students have discovered for themselves.

Creating a dialogue around the theme of what successful digital learning looks like, and linking this to outcomes such as future employability, can address this issue. Defining success will also assist you in future curriculum planning.

What the experts say

“Increasingly, learners expect their digital skills to be a resource for getting on in life, and getting an education. They have innovative learning habits of their own, and they have creative ideas about how educators could better support them. Through stories like these we are learning to listen.”

–Helen Beetham, consultant in higher education

Read key themes from our digital student/learner stories (pdf).

University of Northampton - changemakers of the future

The University of Northampton has created a framework of attributes its graduates should expect to achieve as a result of their studies. In 2013, the university was recognised by Ashoka UK as a ‘Changemaker Campus’, a designation that reflects its commitment to catalysing positive social change through:

- Change: doing the right things

- Self-direction: in the right way

- Collaboration: with the right people

- Positive work ethic, integrity and values: for the right reasons

The model is supported by a toolkit with practical guidance on applying the framework at different levels of learning.

Hartlepool College - it's not just about the qualification

Hartlepool College promotes icould, a free-to-use careers website, on social media to open up a dialogue with students about the transition from learning into work.

With over 1000 video interviews available on the website, students not only gain an insight into different professions and jobs but also discover what skills, qualifications and attributes they need to acquire while on their course, and can assess their own attributes in relation to featured careers.